Welcome to a deep dive into the art of deconstructing analysis techniques! Whether you’re a seasoned researcher or just starting your journey into the world of analysis, this blog post is your ultimate guide to unraveling the layers of text and film. Get ready to sharpen your analytical skills and uncover hidden insights as we explore the intricate process of breaking down complex content. Let’s embark on this enlightening journey together!

Deconstructing Analysis Techniques

Are you ready to master the art of deconstructing analysis techniques? Delve into the intricate world of dissecting text and film with precision and insight. Understanding the underlying layers of content can unlock a wealth of knowledge and understanding.

In this blog post, we will explore the steps involved in conducting a thorough analysis, from uncovering key themes to deciphering complex narratives. By honing your analytical skills, you can extract valuable insights that may have remained hidden at first glance.

Discover how analyzing top papers can provide profound insights into different methodologies and approaches. Stay ahead by keeping an eye on trending topics and discussions within your field of interest.

Join us as we unravel the mysteries of analysis techniques, empowering you to approach texts and films with a critical eye and a keen sense of observation.

The Art of Deconstruction

Deconstruction is like unraveling a mystery, peeling back each layer to uncover hidden meanings and perspectives. It’s not just about what you see on the surface but delving deeper into the nuances within a text or work of art.

The art of deconstruction asks us to question assumptions, challenge established norms, and explore alternative interpretations. It invites us to think critically, analyze intricately, and engage with content in a more profound way.

By breaking down complex ideas into their fundamental components, deconstruction allows us to gain new insights and appreciate the richness of different viewpoints. It encourages us to embrace ambiguity, welcome diversity of thought, and open our minds to new possibilities.

In essence, the art of deconstruction is an intellectual adventure that pushes boundaries, sparks creativity, and expands our understanding of the world around us.

Recommended Readings

Looking for some recommended readings to dive deeper into deconstruction and analysis techniques? You’re in luck! Here are a few titles that will expand your understanding and sharpen your analytical skills.

“The Pursuit of Signs: Semiotics, Literature, Deconstruction” by Jonathan Culler is a classic text on the study of signs and their interpretation, helping you decode hidden meanings in texts. For a more practical approach, “How to Read Literature Like a Professor” by Thomas C. Foster offers insightful tips on literary analysis techniques.

If you’re interested in film analysis specifically, “In the Blink of an Eye” by Walter Murch delves into the world of editing and visual storytelling. And for a broader look at critical theory, “Literary Theory: An Introduction” by Terry Eagleton provides a solid foundation.

These recommended readings will provide valuable insights and perspectives to enhance your analysis skills. Happy reading!

Episode Transcript

Ever wished you could revisit the insightful discussions from your favorite podcast episodes? The Episode Transcript section allows you to do just that. Dive deep into the content, capture key points, and easily reference them later on.

Transcripts offer a valuable resource for those who prefer reading over listening or simply want to review specific details. Whether it’s a profound quote or a critical analysis shared by the host, having the transcript at your fingertips enhances your overall experience.

This section bridges the gap between auditory and visual learners, catering to diverse preferences in consuming information. It empowers you to engage with the material more actively and extract maximum value from each episode. No longer will important insights slip away – they’re right there in black and white for you to absorb at your own pace.

Recommended Products

Looking to enhance your analysis techniques further? Consider these recommended products that can take your deconstruction skills to the next level.

First up, investing in a high-quality notebook or digital note-taking tool can help you organize your thoughts and observations efficiently. Having a dedicated space to jot down key points while analyzing texts is essential.

Additionally, consider purchasing books on critical theory and analytical methods. These resources can provide valuable insights and frameworks to deepen your understanding of different analysis techniques.

For visual learners, acquiring a subscription to online platforms offering film analysis courses or access to scholarly articles can broaden your perspective on deconstructing visual media effectively.

Don’t forget about software tools that assist in data visualization and textual analysis. These tools can streamline the process of dissecting complex information for more insightful conclusions.

Steps in a Deconstruction Analysis

When diving into deconstruction analysis, start by exploring answers from the top 5 papers on the subject. This can provide valuable insights and different perspectives that enrich your understanding of the topic.

Another approach is to see what other people are reading regarding deconstruction analysis. It’s important to stay updated with current trends and discussions in order to broaden your knowledge base.

Consider delving into my columns for in-depth analyses and thought-provoking content related to deconstructing various topics. These resources can offer fresh ideas and unique viewpoints that enhance your analytical skills.

Don’t forget to explore related questions surrounding deconstruction analysis. Engaging with these inquiries can spark new ideas and avenues for exploration, leading to a deeper understanding of this complex analytical technique.

Answers from top 5 papers

Delving into the analysis of top papers can provide valuable insights and perspectives. By examining these well-crafted pieces, you can grasp different approaches to deconstruction techniques. Each paper offers a unique viewpoint, allowing you to broaden your understanding of analysis methods.

Analyzing the top 5 papers brings diverse interpretations and methodologies to light. From literary critiques to scientific research, each paper presents a rich tapestry of analytical strategies. Comparing and contrasting these approaches can enhance your own analytical skills and critical thinking abilities.

Exploring the nuances in these top papers uncovers hidden gems of information that may inspire new ways of approaching analysis. As you immerse yourself in the content of each paper, pay attention to the subtleties and intricacies that contribute to their effectiveness.

By scrutinizing the analyses presented in these top papers, you gain a deeper appreciation for the art of deconstruction. Embrace this opportunity to learn from experts in various fields and incorporate their techniques into your own analytical toolbox.

See what other people are reading

Curious about what’s trending in the world of analysis techniques? Delve into what other avid readers and researchers are exploring. By seeing what piques their interest, you might discover new perspectives, methodologies, or even hidden gems that could enhance your own analytical skills.

Exploring the literature others are engaging with can spark inspiration and offer fresh insights. It’s like taking a peek into a vast library where each book holds untapped knowledge waiting to be uncovered. Embrace the opportunity to broaden your horizons by following the trail of popular analysis topics circulating within academic circles.

Analyzing the reading preferences of others can unveil patterns and emerging trends in various fields. Stay informed about current discourse and research directions by observing what captures the attention of fellow enthusiasts. This window into collective interests can guide you towards valuable resources and thought-provoking discussions.

Engage with diverse content that resonates with peers in your field or across disciplines. By keeping an eye on trending reads, you open doors to collaborative opportunities, shared insights, and potential breakthroughs in your analytical journey. Dive into this dynamic pool of knowledge-sharing to expand your intellectual repertoire effortlessly.

Related Questions

Curious minds often seek more, diving deeper into the realm of analysis techniques. Related questions spark new avenues for exploration, leading to a richer understanding of the subject matter. These inquiries act as breadcrumbs, guiding us along an intellectual journey filled with discovery and insight.

Exploring related questions can unveil hidden connections and prompt thought-provoking discussions. They serve as gateways to unlocking complexities and nuances within the analytical process. Embracing these queries opens up opportunities for critical thinking and innovative perspectives.

Intriguingly, delving into related questions not only broadens our knowledge but also sharpens our analytical skills. As we ponder over these inquiries, we refine our ability to deconstruct texts with precision and clarity. Each question acts as a puzzle piece that contributes to the larger picture of comprehensive analysis.

So, next time you encounter related questions in your analytical pursuits, embrace them wholeheartedly. Explore them in depth, unraveling layers of meaning and interpretation that enrich your scholarly endeavors.

Understanding Analysis

Understanding analysis is like deciphering a complex puzzle. It involves unraveling layers of information to reveal the underlying meaning. Each piece contributes to the bigger picture, guiding you through a maze of thoughts and ideas.

Analyzing text requires attention to detail, picking up on subtle cues that may hold significance. It’s about delving beyond the surface and exploring the deeper implications hidden within words.

Every analysis is an opportunity to delve into different perspectives, challenging your own beliefs and expanding your understanding. It’s a journey of discovery, where each insight gained adds another dimension to your comprehension.

Interpreting data through analysis allows you to see patterns emerge, connecting dots that were once scattered. It’s about piecing together fragments of information to construct a coherent narrative that sheds light on the subject at hand.

Techniques for Analyzing any Text

Analyzing any text requires a keen eye and a systematic approach. Start by reading the text multiple times, noting down key points, themes, and patterns that emerge. Look for recurring motifs or symbols that might hold deeper meaning. Pay attention to the author’s choice of words, sentence structure, and tone to uncover underlying messages.

Consider the context in which the text was written – historical background, cultural influences, and author’s intentions can all shape interpretation. Don’t shy away from exploring different perspectives or interpretations; analyzing a text is about delving into its complexities.

Identify literary devices like imagery, metaphors, and foreshadowing, and use them to decode layers of meaning within the text. Connect these elements back to the central theme or thesis of the piece for a comprehensive analysis. Discuss your findings with peers or mentors to gain new insights and broaden your understanding.

Incorporate outside research or critical reviews to enhance your analysis further. Employing these techniques thoughtfully and engaging critically with texts on multiple levels can lead you to profound revelations hidden within their pages.
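If you like to back up your close reading with a quick count, the "recurring motifs" step can be sketched in a few lines of Python. This is only a rough aid, not a substitute for interpretation: the stopword list, the function name `recurring_words`, and the sample sentence are all invented here for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; a real analysis would need a fuller one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "was", "it", "on", "by", "its"}

def recurring_words(text, min_count=2):
    """Return (word, count) pairs for non-stopwords that appear at least min_count times."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    return [(w, n) for w, n in counts.most_common() if n >= min_count]

# Invented sample passage, used only to show the idea.
sample = (
    "The green light burned across the water. He reached toward the light, "
    "and the water lay dark between them."
)

print(recurring_words(sample))
```

A word that keeps coming back is only a candidate motif. Whether it carries meaning still depends on where it appears and how the author frames it.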

License

“License” in the realm of analysis techniques refers to the permissions or rights granted for using certain materials, such as texts, images, or data. Understanding licensing agreements is crucial when conducting detailed analyses. It ensures that you are compliant with copyright laws and regulations while dissecting content.

When delving into analysis work, always be mindful of the licenses attached to the resources you are utilizing. Different licenses may dictate how you can quote, reference, or reproduce material within your analysis. By adhering to these guidelines responsibly, you maintain integrity in your research process.

Acknowledging license terms also showcases respect for intellectual property rights and creators’ works. It demonstrates ethical conduct and professionalism in your analytical endeavors. Remember: a clear understanding of licensing ensures credibility and authenticity in your analysis outcomes.

Deconstructing a Film Analysis Essay: Tips and Techniques

When it comes to deconstructing a film for analysis, there are several key tips and techniques to keep in mind. Remember that watching the movie once might not be enough – multiple viewings can unveil hidden details and nuances you may have missed initially.

To start your analysis essay, consider utilizing the three-act structure commonly found in films. Take notes while watching the movie to capture important scenes, dialogues, and visual elements that contribute to its overall impact.

Visual components like camera work, lighting choices, costumes, makeup, and props all play a significant role in conveying the film’s message. Pay attention to timing as well; the pacing of scenes can influence how audiences perceive certain moments.

In crafting your essay structure, ensure you include an engaging introduction that sets the tone for your analysis. Develop body paragraphs that delve into specific aspects of the film with supporting evidence from your observations.

When concluding your analysis essay on a film, reiterate key points without introducing new information. If needed, seek assistance from an experienced editor to refine your work before submitting it for academic purposes or professional critique.

One watch isn’t enough

Watching a film for the first time is like dipping your toes in an ocean of storytelling. The surface may sparkle with intrigue, but it’s the depths that hold the true treasures. One watch isn’t enough to absorb all the nuances and layers woven into a cinematic masterpiece.

Each frame is crafted with purpose, each line of dialogue laden with meaning. A single viewing merely scratches the surface of what lies beneath. It’s akin to skimming a book instead of delving deep into its pages; you might grasp the plot, but you miss out on the intricate details that enrich the narrative.

To truly appreciate a film’s complexity, multiple viewings are essential. Each rewatch peels back another layer, revealing hidden symbolism, foreshadowing clues, and subtle character developments that evade casual observation.

So next time you’re tempted to dismiss a film after one viewing, remember – there’s always more beneath the surface waiting to be discovered. Dive deeper and let each watch unveil new insights that enrich your cinematic experience beyond imagination.

The three-act structure

Understanding the three-act structure is crucial when deconstructing a film’s narrative. Act one sets the stage, introduces characters, and establishes the main conflict. It’s where the story begins to unfold and captivate the audience.

Act two is all about development – complications arise, tension escalates, and characters face challenges that push the narrative forward. This section keeps viewers engaged and invested in what will happen next.

Act three brings resolution – conflicts are resolved, loose ends tied up, and conclusions drawn. It’s where everything comes together for a satisfying conclusion or leaves room for interpretation.

Mastering the three-act structure allows analysts to dissect narratives effectively by understanding how each part contributes to the overall storytelling arc.

Note-taking is a must

Taking notes is a fundamental part of any deconstruction analysis. When diving into the intricate details of a text or film, jotting down key points can help you stay organized and focused.

By documenting important elements as you go along, you create a roadmap for your analysis process. This allows you to refer back to specific details easily and ensures nothing crucial slips through the cracks.

Whether it’s identifying symbolism in a novel or dissecting cinematography choices in a movie, having detailed notes at hand provides valuable insights during the analytical journey.

Remember that note-taking isn’t just about recording information; it’s about actively engaging with the material. Make connections, ask questions, and challenge assumptions as you write down your observations.

So grab your pen and paper or fire up your digital notebook – because when it comes to unraveling complex analyses, meticulous note-taking is indeed non-negotiable.
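For readers who keep their notes digitally, here is one possible way to structure them so they are easy to refer back to. It is a minimal sketch in Python: the `Note` fields, the category names, and the sample observations are assumptions made up for this example, not a prescribed format.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Note:
    timestamp: str    # position in the film as "HH:MM:SS" (or a page reference for a text)
    category: str     # hypothetical labels such as "lighting" or "symbolism"
    observation: str  # what you noticed, in your own words

def group_by_category(notes):
    """Collect notes under their category, keeping the order in which they were taken."""
    grouped = defaultdict(list)
    for note in notes:
        grouped[note.category].append(note)
    return dict(grouped)

# Invented example notes for an imaginary film.
notes = [
    Note("00:04:12", "lighting", "Harsh shadows when the antagonist first appears"),
    Note("00:17:40", "symbolism", "Broken clock shown for the second time"),
    Note("01:02:05", "lighting", "Warm tones return in the reunion scene"),
]

for category, items in group_by_category(notes).items():
    print(category, [n.timestamp for n in items])
```

Grouping by category makes it easier to turn scattered observations into body paragraphs later, since each group already gathers the evidence for one aspect of the film.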

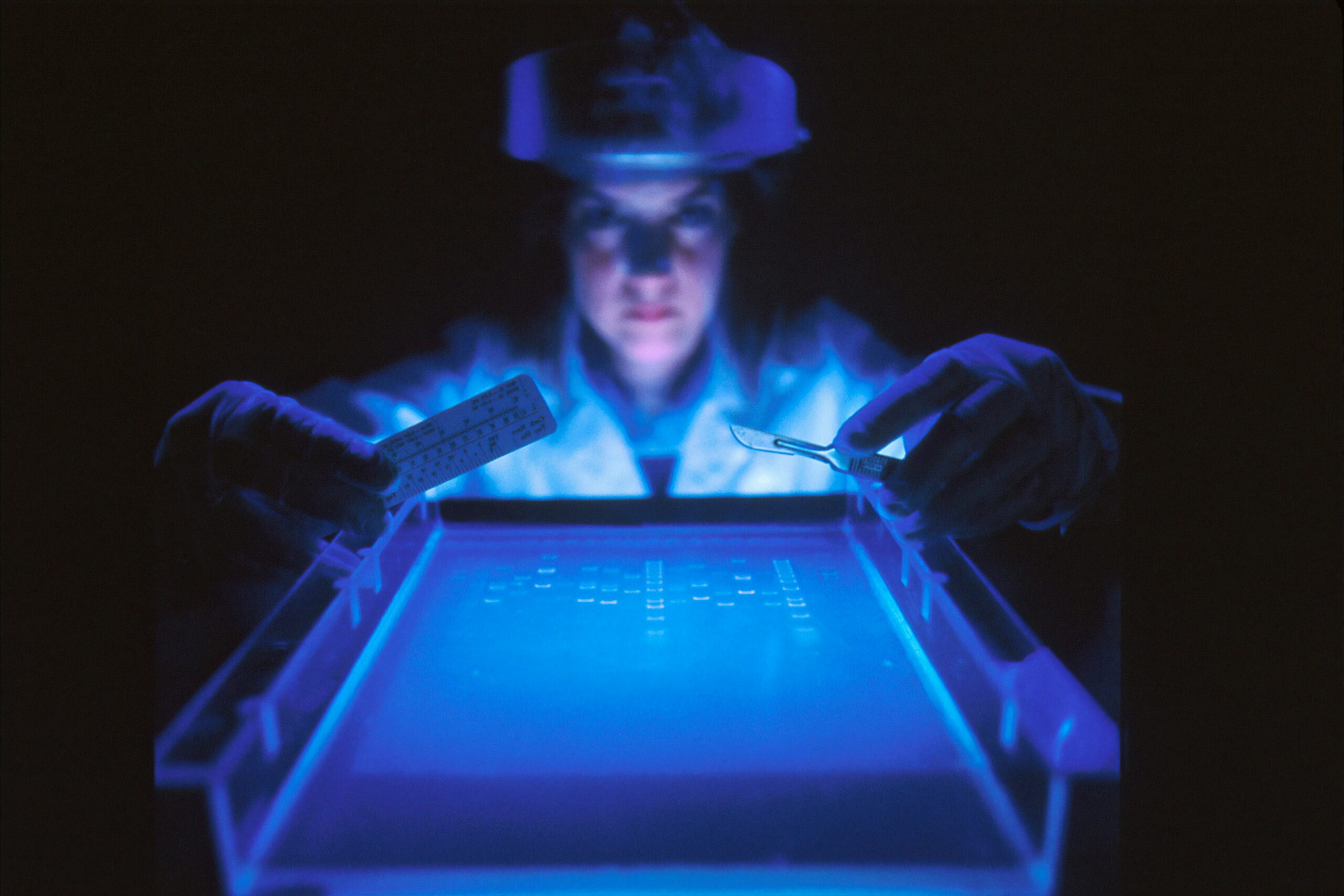

Visual elements to analyze

When deconstructing visual elements in an analysis, pay attention to the color palette used. Colors can evoke emotions and convey themes subtly. Notice how different hues are employed throughout the film or artwork.

Another crucial aspect is the composition of each frame or scene. Consider the placement of characters and objects within the frame. How does this arrangement contribute to the overall storytelling?

Lighting plays a vital role in setting mood and atmosphere. Analyze how light is used, whether it’s natural lighting, harsh shadows, or soft illumination. These choices can significantly impact the viewer’s perception.

Don’t forget about camera angles and movements; they influence the audience’s perspective and engagement. Take note of any unique shots or camera techniques employed that enhance the narrative.

Examine any visual motifs or symbols present throughout the piece. These recurring images often hold deeper meaning and add layers to your analysis.

Your essay structure

Crafting a well-structured essay is essential when presenting a deconstruction analysis. Your essay structure serves as the backbone of your analysis, guiding readers through your insights and interpretations.

Start with a clear introduction that sets the stage for what will be discussed in your analysis. This section should provide an overview of the film or text you are analyzing while also introducing the main themes or points you will explore.

In the body paragraphs, delve deeper into your analysis by breaking down key scenes or passages. Each paragraph should focus on a specific aspect of the film or text, supporting your points with evidence and examples to strengthen your argument.

Transition smoothly between paragraphs to maintain a coherent flow in your analysis. Use linking words and phrases to connect ideas and ensure that each point leads logically to the next.

Wrap up your essay with a strong conclusion that summarizes your main findings without simply restating them. Leave readers with a thought-provoking insight or question to ponder after reading your analysis.

Camera work

When it comes to analyzing a film, paying attention to camera work is crucial. The way a scene is framed can convey emotions and enhance storytelling. Camera angles, movement, and focus all play a role in shaping the viewer’s experience.

The choice between close-ups and wide shots can affect how the audience connects with the characters and the plot. Movement and cutting each serve a purpose: tracking shots create fluidity, while jump cuts, which are an editing choice rather than a camera move, can evoke tension or disorientation.

Consider how lighting interacts with the camera work to set the mood. Shadows and highlights can add depth and dimension to a scene, influencing the overall atmosphere.

Camera work isn’t just about technical aspects; it’s also about artistic expression. Directors use framing and composition creatively to engage viewers on an emotional level. Paying attention to these details can elevate your analysis of a film significantly.

Lighting

When analyzing lighting in a film, pay attention to how it sets the mood and enhances the narrative. Lighting can create depth, cast shadows, and emphasize certain elements within a scene.

Consider how natural light versus artificial light is used to convey different emotions or themes. Soft lighting may suggest intimacy, while harsh lighting can evoke tension or drama.

Notice the color temperature of the lights – warm tones often create a cozy atmosphere, while cool tones may indicate suspense or detachment.

Keep an eye out for creative use of shadows and silhouettes that add layers of symbolism or visual interest to a scene. The interplay between light and shadow can reveal underlying subtexts in the story.

Remember that lighting is not just about visibility; it’s a powerful tool for storytelling in visual media. Paying attention to how light is utilized can uncover hidden meanings and enhance your analysis skills when deconstructing films.

Costumes, makeup, and props

Costumes, makeup, and props play a crucial role in film analysis. When watching a movie, pay attention to the details in the costumes worn by the characters. The clothing choices can reflect their personality, status, or even foreshadow events.

Similarly, analyzing makeup can provide insight into character development and emotions. Subtle changes in makeup throughout a film can indicate transformations or inner conflicts within a character.

Props are not just objects on set; they hold symbolic meanings that contribute to the narrative. Notice how certain props are used repeatedly or stand out in key scenes, as they often carry deeper significance.

By dissecting the choices made regarding costumes, makeup, and props, viewers can uncover layers of storytelling that may not be immediately apparent. So next time you watch a film for analysis purposes, keep an eye out for these intricate details that add depth to the cinematic experience.

Timing

Timing is crucial when deconstructing a film. It’s not just about the length of scenes but also the pacing throughout the movie. The rhythm created by the timing of events can evoke specific emotions or build tension.

Consider how long each shot lasts and how it contributes to the overall narrative flow. Quick cuts may indicate urgency or chaos, while longer shots can convey contemplation or significance.

Pay attention to when certain events occur in relation to one another. The sequence of scenes can impact the viewer’s understanding and interpretation of the story.

Timing isn’t just about runtime; it’s about every moment captured on screen and its impact on the audience’s experience. So, next time you analyze a film, remember that even a fraction of a second can make a difference in how we perceive what unfolds before us.
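If you log the moments where cuts happen while rewatching a scene, you can put a rough number on its pacing. The sketch below assumes you have noted cut points in seconds by hand; the function names and both sets of timestamps are hypothetical and exist only to illustrate the comparison between quick cuts and longer shots.

```python
def shot_lengths(cut_times):
    """Turn a sorted list of cut timestamps (in seconds) into per-shot durations."""
    return [later - earlier for earlier, later in zip(cut_times, cut_times[1:])]

def average_shot_length(cut_times):
    """Mean duration of the shots between consecutive cuts; 0.0 if there are no shots."""
    lengths = shot_lengths(cut_times)
    return sum(lengths) / len(lengths) if lengths else 0.0

# Invented cut points for two imaginary scenes.
chase_scene = [0.0, 1.5, 3.0, 4.0, 5.5, 7.0]
dinner_scene = [0.0, 12.0, 30.0, 41.0]

print("chase:", average_shot_length(chase_scene))
print("dinner:", average_shot_length(dinner_scene))
```

A much lower average for one scene than another is a starting point for your argument, not the argument itself: you still need to explain what the faster or slower rhythm does for the story.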

What to include in the introduction

When crafting the introduction of your analysis essay, it’s crucial to set the stage for what lies ahead. Start by providing a brief overview of the film you’ll be analyzing, giving readers a glimpse into its storyline and main themes. Consider mentioning the director, year of release, and any significant background information that adds context.

Next, clearly state your thesis statement – this is the central argument you will be exploring throughout your analysis. It should be concise yet compelling, guiding readers on what to expect in terms of your interpretation and insights into the film.

Additionally, consider including some general observations about the overall impact or significance of the movie. This can help pique readers’ interest and provide a broader perspective before delving into detailed analysis later on.

Remember to maintain a smooth transition from your introduction to the body paragraphs where you’ll support your thesis with evidence from the film. A well-crafted introduction not only grabs attention but also lays a strong foundation for an engaging and insightful analysis essay.

Body paragraph analysis

When it comes to analyzing body paragraphs in your essay, focus on dissecting the core arguments presented. Look at how each paragraph contributes to the overall thesis statement and supports the main idea. Pay attention to transitions between paragraphs for a seamless flow of ideas.

Each body paragraph should have a clear topic sentence that introduces the main point. Analyze how supporting details and evidence are used to strengthen this central idea. Consider the structure within each paragraph – does it follow a logical progression?

Examine the language and writing style employed in each body paragraph. Note any persuasive techniques or rhetorical devices used to sway the reader’s opinion. Evaluate how well these elements enhance the argument being made.

Take note of any counterarguments presented within the body paragraphs and analyze how they are addressed or refuted by the writer. Consider whether alternative viewpoints are acknowledged and effectively countered.

Remember, a thorough analysis of body paragraphs involves digging deep into not just what is said but also how it is conveyed and supported throughout your essay’s argumentation process.

Concluding your analysis essay

You’ve analyzed every aspect of the film, delving into its intricacies and unraveling its hidden layers. Your analysis essay is a masterpiece that captures the essence of the cinematographic work you studied. Concluding your analysis essay requires finesse and precision, tying together all your observations into a cohesive whole.

As you reach the end of your essay, reflect on the impact of your findings and how they contribute to a deeper understanding of the film. Your conclusion should leave readers with a lasting impression, provoking thoughts and sparking discussions long after they finish reading.

Consider reiterating key points from your analysis, reinforcing their significance in shaping the narrative or thematic elements of the film. End on a strong note that resonates with your audience, leaving them pondering the film’s complexities and nuances even after they have closed the pages of your essay.

Need an academic editor before submitting your work?

Before you finalize and submit your analysis, consider the value of having an academic editor review your work. A fresh pair of eyes can provide valuable insights, catch any overlooked errors, and ensure that your analysis is polished to perfection. Whether it’s for clarity, structure, or formatting, an academic editor can help elevate your work to the next level.

Remember, investing in professional editing services can make a significant difference in how your analysis is received by readers or evaluators. So why not take that extra step to ensure that your hard work shines through without any distractions? Consider reaching out to an academic editor today and watch as your analysis transforms into a refined piece of scholarly excellence.